AI has emerged as a transformative force in almost every field, and cybersecurity is no exception. It has found use as a weapon, but also as a shield. At Patchstack we're working on using AI for good, as a tool to find and fix open-source vulnerabilities faster.

In this article, originally published on LinkedIn, our very own Ibad Rehman pulls back the curtain a bit on how Large Language Models have changed the threat landscape, and how AI could be used to deal with threats.

The capabilities of LLMs in executing cyber attacks have become a growing concern for professionals across various sectors. If you're someone who's navigating the intricacies of cybersecurity, understanding the potential risks and implications of these models is crucial.

How Can Language Models Be Used in Cyber Attacks?

Phishing Attacks Crafted by AI

The evolution of phishing attacks has taken a significant leap forward with the integration of large language models (LLMs). These AI systems can now craft emails and messages that are hard to distinguish from those written by humans. By analyzing vast datasets of legitimate communications, they can mimic tone, style, and content with alarming accuracy. As a result, phishing attempts are more likely to deceive recipients: the emails can be personalized and appear entirely credible, bypassing traditional detection methods that look for the odd phrasing or errors commonly found in less sophisticated scams.

Automated Vulnerability Detection

Another area where LLMs excel is in the automated detection of vulnerabilities within software and systems. By understanding the intricacies of cybersecurity-related text, data, and patterns, these models can sift through code, identify potential weaknesses, and even suggest exploits.

This is a double-edged sword; while it can significantly enhance a cybersecurity team's ability to protect their assets, in the wrong hands, it also provides a powerful tool for identifying targets for cyber attacks. The speed and efficiency with which LLMs can analyze and understand complex systems make them invaluable for both defence and offence in the cyber realm.

Malware Creation and Spread

Finally, the creation and dissemination of malware have been greatly facilitated by the advent of LLMs. These AI systems can generate code, create malicious software that can evade detection, and even tailor the malware to exploit specific vulnerabilities found in targeted systems.

Additionally, the spread of malware is made more efficient by using LLMs to craft convincing messages that encourage users to unwittingly install harmful software. The sophistication of these messages and the malware itself means that even well-protected systems are at risk, as traditional security measures struggle to keep pace with the rapidly evolving capabilities of AI-generated threats.

From crafting highly convincing phishing emails to detecting vulnerabilities and creating sophisticated malware, LLMs represent a significant shift in the landscape of cyber threats. As these models continue to evolve, so too must the strategies employed by cybersecurity professionals to protect against these increasingly sophisticated attacks.

LLMs vs Static Code Analyzers

In this context, I would like to discuss in detail a paper I recently read: "Can Large Language Models Find and Fix Vulnerable Software?" by David Noever.

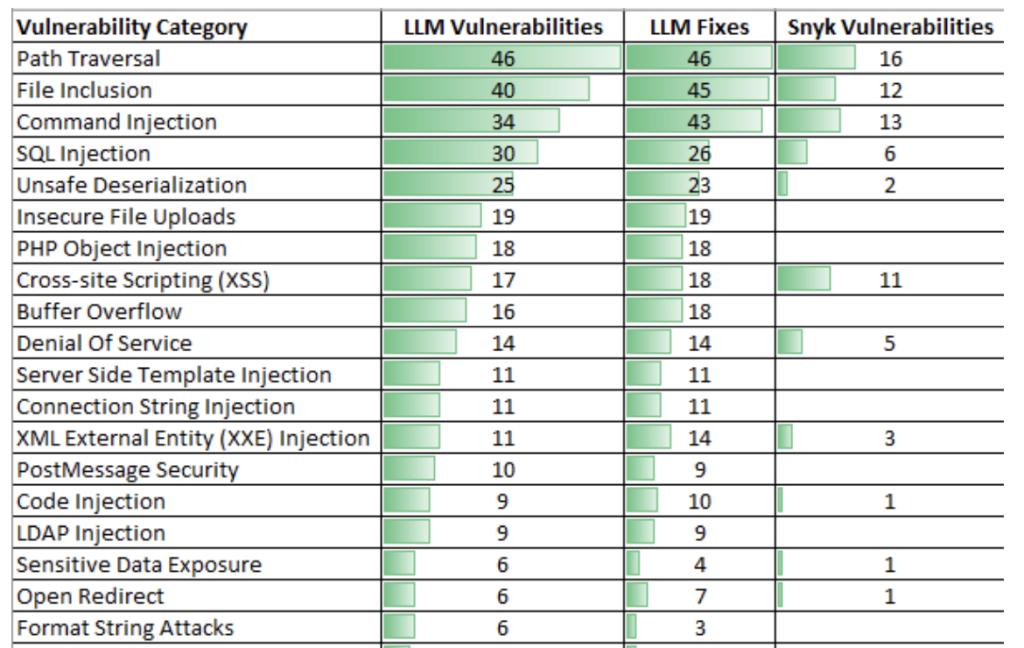

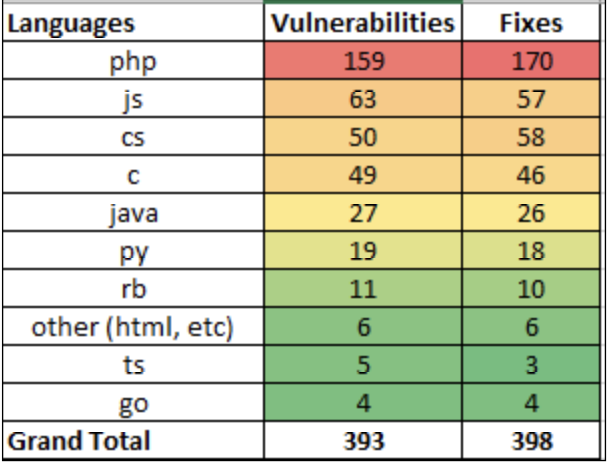

In this paper, state-of-the-art (SOTA) LLMs such as GPT-4 were tested against traditional static code analyzers such as Snyk and Fortify. The tests encompassed 129 code samples across eight programming languages and found the highest number of vulnerabilities in PHP and JavaScript.

Six public repositories on GitHub were submitted to the automated static code scanner, Snyk, to illustrate the plethora of identifiable vulnerabilities and the breadth of language problems addressed by LLMs.
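To make the comparison more concrete, here is a minimal sketch of the kind of pattern-matching rule a static scanner might apply. It is not how Snyk or Fortify work internally; the rule names, the regular expressions, and the PHP sample are all invented for illustration, and real analyzers rely on much richer techniques such as data-flow analysis.

```python
import re

# Hypothetical, deliberately tiny rule set (assumption: not taken from any real tool).
RULES = {
    # Flags any call to eval(...)
    "eval-call": re.compile(r"\beval\s*\("),
    # Flags a SQL keyword followed on the same line by raw request input
    "sql-concat-user-input": re.compile(
        r"(?i)\b(select|insert|update|delete)\b.*\$_(GET|POST|REQUEST)"
    ),
}


def scan(source: str) -> list[tuple[int, str]]:
    """Return (line number, rule id) pairs for every rule that matches a line."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for rule_id, pattern in RULES.items():
            if pattern.search(line):
                findings.append((lineno, rule_id))
    return findings


# Invented vulnerable PHP snippet used only as scanner input.
sample = '''<?php
$id = $_GET["id"];
$rows = $db->query("SELECT * FROM users WHERE id = " . $_GET["id"]);
eval($_POST["code"]);
'''

print(scan(sample))
# prints [(3, 'sql-concat-user-input'), (4, 'eval-call')]
```

A rule like this only fires on the exact shapes it was written for, which is one plausible reason a model that reads code more flexibly could report more findings than a purely rule-based tool.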

GPT-4 identified approximately four times as many vulnerabilities as its counterparts and provided viable fixes for each vulnerability, demonstrating a low rate of false positives. A critical insight was the LLMs' ability to self-audit, suggesting fixes for the vulnerabilities they identified and underscoring their precision.

As a final evaluation, all 129 code samples were submitted to GPT-4, seeking corrected code only. The corrected code was then resubmitted to Snyk to compare against the vulnerable codebase, scoring the self-correction capabilities of LLMs.
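One way to think about scoring such a before-and-after comparison is as the fraction of scanner findings that disappear once the code has been rewritten. The sketch below is an assumption about how that could be computed, not the paper's actual scoring method; the `ScanResult` type, the file names, and the counts are placeholders invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class ScanResult:
    sample: str   # name of the code sample (hypothetical)
    before: int   # scanner findings in the original code
    after: int    # scanner findings in the LLM-corrected code


def fix_rate(results: list[ScanResult]) -> float:
    """Fraction of the original findings that no longer appear after correction."""
    total_before = sum(r.before for r in results)
    total_after = sum(r.after for r in results)
    if total_before == 0:
        return 0.0  # nothing to fix, so no reduction to report
    return (total_before - total_after) / total_before


# Placeholder numbers, NOT results from the paper.
results = [
    ScanResult("login.php", before=4, after=1),
    ScanResult("upload.js", before=6, after=1),
]

print(f"{fix_rate(results):.0%} of findings removed")
# prints 80% of findings removed
```

A single aggregate like this hides cases where a rewrite introduces new issues in one sample while fixing others, so per-sample numbers are worth keeping alongside it.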

An example showed that GPT-4 not only identified vulnerabilities but also offered plain English explanations of why the vulnerability arises and how an attacker might exploit it.

GPT-4 identified 393 vulnerabilities, almost twice as many as GPT-3 (213) and roughly four times the number found by Snyk (99).
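The ratios behind "almost twice" and "four times" can be checked directly from the three counts quoted above. The helper name `ratio_to` is just an illustrative choice.

```python
# Vulnerability counts as quoted in this article.
counts = {"GPT-4": 393, "GPT-3": 213, "Snyk": 99}


def ratio_to(baseline: str, tool: str = "GPT-4") -> float:
    """Return how many times more findings `tool` reported than `baseline`."""
    return counts[tool] / counts[baseline]


for name in ("GPT-3", "Snyk"):
    print(f"GPT-4 vs {name}: {ratio_to(name):.2f}x")
# prints:
# GPT-4 vs GPT-3: 1.85x
# GPT-4 vs Snyk: 3.97x
```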

Real-World Examples of AI in Cyber Security

AI-Driven Security Systems

As the digital landscape evolves, so too does the sophistication of cyber threats. In response, AI-driven security systems have emerged as a critical line of defence. These systems leverage machine learning to adapt and respond to threats in real time. By analyzing patterns and behaviours, they can identify potential threats before they materialize.

For instance, companies like LogRhythm are revolutionizing how organizations approach cybersecurity. Through comprehensive solutions that encompass end-to-end security, LogRhythm utilizes machine learning not only to detect threats but also to identify compromised accounts and unusual activities that could indicate a breach. This proactive approach to security underscores the power of AI in safeguarding digital assets and information.
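The general idea of flagging unusual activity against a learned baseline can be shown with a very simple statistical check. This is a toy sketch and not a description of how LogRhythm or any other product works; the function name, the threshold of 3 standard deviations, and the login counts are all assumptions made for the example.

```python
from statistics import mean, stdev


def flag_anomalies(
    history: list[int], recent: list[int], threshold: float = 3.0
) -> list[int]:
    """Return values in `recent` whose z-score against `history` exceeds `threshold`."""
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation; needs at least 2 points
    if sigma == 0:
        # A perfectly flat baseline: anything different from it is unusual.
        return [x for x in recent if x != mu]
    return [x for x in recent if abs(x - mu) / sigma > threshold]


# Invented hourly login counts for one account.
baseline_logins = [4, 5, 6, 5, 4, 6, 5, 5]
today = [5, 6, 40]

print(flag_anomalies(baseline_logins, today))
# prints [40]
```

Production systems typically combine many signals and learned models rather than a single threshold, but the underlying pattern is the same: model normal behaviour, then surface what deviates from it.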

LLMs in Threat Intelligence and Response

Large Language Models (LLMs) are playing an increasingly pivotal role in the realm of threat intelligence and response. These sophisticated AI models can process and analyze vast quantities of data at an unprecedented scale. This capability allows them to identify and understand complex cyber threats, including those that have never been seen before.

Cybereason's AI-powered analytics platform, for example, shows how LLMs can enhance threat monitoring and analysis. By providing deeper visibility into security environments, Cybereason enables organizations to detect and respond to threats more effectively. Its AI-driven technology automates the detection process, making it easier for security teams, regardless of size or expertise, to stay one step ahead of potential attacks.

The integration of AI and LLMs into cybersecurity represents a significant shift in how we protect against and respond to cyber threats. From enhancing security systems to providing advanced threat intelligence, the capabilities of these technologies are reshaping the cyber defence landscape. As cybercriminals become more sophisticated, the role of AI and LLMs in cybersecurity will only grow, offering both challenges and opportunities in the ongoing battle against cyber threats.

Potential Risks and Benefits of Large Language Models

One of the most promising aspects of LLMs is their potential to revolutionize cybersecurity practices. By automating complex tasks such as sentiment analysis, customer service, and fraud detection, LLMs can significantly reduce the burden of manual labour, cutting costs and enhancing efficiency.

Moreover, their ability to generate human-like text and make predictions based on vast datasets allows for more personalized and satisfying interactions with technology, improving both availability and customer satisfaction.

In the context of cybersecurity, these models can be trained to identify and respond to threats more quickly and accurately than ever before. By sifting through enormous quantities of data, LLMs can detect subtle patterns indicative of cyber attacks, potentially stopping hackers in their tracks before any real damage is done. This proactive approach to security could significantly reduce the incidence of data breaches and other cyber crimes, safeguarding sensitive information for businesses and individuals alike.

Risks of Misuse by Malicious Actors

However, the power of LLMs is a double-edged sword. Just as these models can be used to protect, they can also be exploited by those with nefarious intentions. The ability of LLMs to generate convincing, human-like text makes them potent tools for crafting phishing emails, fake news, and other forms of misinformation. Malicious actors could leverage these capabilities to manipulate public opinion, steal personal information, or even destabilize financial markets.

Moreover, the concerns surrounding bias, inaccuracy, and toxicity in LLM outputs cannot be overlooked. If not carefully managed, these issues could undermine the effectiveness of cybersecurity measures, leading to flawed decision-making and potentially exacerbating the very problems they aim to solve. Ensuring that LLMs are trained on diverse, accurate, and unbiased data sets is therefore crucial to maximizing their potential benefits while minimizing risks.

As we continue to explore the capabilities and applications of large language models, it's essential to approach their development and deployment with a balanced perspective. By harnessing their strengths and mitigating their weaknesses, we can unlock the full potential of LLMs to enhance cybersecurity measures while remaining vigilant against the risks of misuse by malicious actors.

Future Trends in AI and Cybersecurity

As we navigate deeper into the digital age, the evolution of cyber threats becomes an increasingly complex puzzle. With Check Point Research highlighting AI developments and ransomware among its top cybersecurity predictions for 2024, it's clear that we're on the cusp of a significant transformation in the cyber threat landscape. The anticipated cost of global cybercrime, expected to exceed $23 trillion by 2027, underscores the urgency in understanding and preparing for these evolving threats.

The sophistication of cyber attacks is expected to grow, leveraging artificial intelligence to bypass traditional security measures. As AI technologies become more advanced, so too do the tactics of cybercriminals, creating a perpetual arms race between threat actors and defenders. This trend points towards a future where predicting and preemptively countering cyber threats will require deep integration of AI into cybersecurity strategies.

The Role of AI in Shaping Cyber Defense Strategies

The role of AI in cybersecurity is becoming increasingly indispensable. Companies like LogRhythm and Cybereason are at the forefront, utilizing AI to enhance threat detection and response. These organizations leverage machine learning to identify unusual behaviour that may indicate a security threat, from compromised accounts to privilege abuse. This approach not only speeds up the detection process but also improves the accuracy of identifying potential threats.

AI-driven cybersecurity solutions offer a myriad of benefits, including enhanced identity management to prevent unauthorized access, real-time monitoring for comprehensive protection, and improved visibility to uncover hidden security gaps. By automating the detection and response processes, AI enables IT and security teams to operate more efficiently, focusing their efforts on the most critical issues identified through precise, data-driven insights.

As we look towards the future, the integration of AI into cybersecurity strategies is not just an option but a necessity. The dynamic nature of cyber threats demands a similarly dynamic defence mechanism, one that can adapt, learn, and respond at the speed required to protect digital assets effectively.

Companies like CrowdStrike, Palo Alto Networks, and Darktrace are leading the charge, merging AI and cybersecurity to safeguard the digital frontier. Their work exemplifies the potential of AI not only to respond to threats but also to anticipate them, offering a glimpse into a future where cybersecurity defences are as intelligent and adaptable as the threats they aim to thwart.

The interplay between AI and cybersecurity is set to redefine the battleground for digital security. As cyber threats evolve, so too must our strategies for defence. By harnessing the power of AI, we can turn the tide against cybercrime, protecting our digital world with smarter, more responsive cybersecurity solutions.

Wrapping Up

In conclusion, the capabilities of large language models in executing cyber attacks underscore a pivotal moment in our digital era. As these models evolve, so too does the landscape of cybersecurity threats.

The double-edged sword of advanced AI technology presents a paradox; it harbours the potential for both groundbreaking advancements and unprecedented vulnerabilities. This duality demands a proactive and dynamic approach to cybersecurity, where continuous learning, ethical AI development, and robust defensive strategies become the pillars of our digital defence.

As we stand on the brink of this new frontier, the question is not if we will adapt to this challenge, but how swiftly and effectively we can do so. The future of our digital world depends on it.